TL;DR #

Query languages like KQL excel at fetching data, but analyzing the results (finding patterns, running statistics, exploring iteratively) remains manual work. In this post, we share how we built a code execution sandbox that enables AI agents to analyze logs in real time by dynamically generating and executing Python code. This capability powers multiple features across our platform, from automated data source mapping to intelligent query summaries and AI-assisted triage.

Introduction #

The Gap Between Fetching and Understanding #

Security teams face an ever-growing challenge: making sense of massive volumes of log data. Query languages like KQL excel at fetching data — filtering, sorting, and retrieving the exact records you need. But once you have those results, the real work begins.

An analyst examining 10,000 authentication logs needs to:

- Identify patterns and anomalies

- Perform statistical analysis across multiple dimensions

- Correlate events to build a timeline

- Distill insights into actionable findings

This is analysis, not fetching, and it’s a fundamentally different problem.

Consider these scenarios:

Example 1: Detecting Anomalous Login Patterns

“Are there any users with login times that deviate significantly from their historical baseline?”

A KQL query can fetch all login events, but then what? The analyst must manually review timestamps, calculate statistics per user, identify outliers, and determine what’s normal versus suspicious. With thousands of events, this is tedious and error-prone.
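To make the manual work concrete, here is a minimal sketch of the per-user baseline check described above. It assumes the fetched events are already in a pandas DataFrame with hypothetical `user` and `timestamp` columns, and it uses a simple z-score on the hour of day with an arbitrary threshold of 3. A real baseline would likely need to handle the circular nature of clock time and much more history.

```python
import pandas as pd


def flag_unusual_login_hours(df: pd.DataFrame, threshold: float = 3.0) -> pd.DataFrame:
    """Return logins whose hour of day deviates strongly from the user's own mean."""
    events = df.copy()
    events["hour"] = pd.to_datetime(events["timestamp"]).dt.hour

    # Broadcast each user's mean and standard deviation back onto their rows.
    per_user = events.groupby("user")["hour"]
    mean = per_user.transform("mean")
    std = per_user.transform("std")

    # A user with one login (std is NaN) or identical hours (std is 0)
    # has no usable baseline, so we score those rows as 0.
    events["zscore"] = ((events["hour"] - mean) / std.where(std > 0)).fillna(0.0)
    return events[events["zscore"].abs() > threshold]


# Hypothetical data: alice logs in at 09:00 for 20 days, then once at 03:00.
rows = [{"user": "alice", "timestamp": f"2024-01-{d:02d} 09:00:00"} for d in range(1, 21)]
rows.append({"user": "alice", "timestamp": "2024-01-21 03:00:00"})
rows += [{"user": "bob", "timestamp": f"2024-01-{d:02d} 14:00:00"} for d in range(1, 6)]

outliers = flag_unusual_login_hours(pd.DataFrame(rows))
```

Even this small check takes several steps that a fetch-only query language does not express naturally, which is the gap the rest of this post addresses.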

Example 2: Intelligent Query Summarization

“What are the key insights from these 10,000 failed authentication attempts?”

Rather than manually reviewing pages of results, security teams want automatic analysis: “The largest cluster of failures (847 of 10,000) comes from a single user ‘[email protected]’ across 3 IP addresses in the last hour, a possible credential stuffing attack.”

Why Code Execution Agents? #

The fundamental problem is this: structured queries fetch data, but flexible code is needed to analyze it.

To bridge this gap, we need systems that can:

- Write custom analysis logic on demand - Not predefined queries, but dynamically generated Python code tailored to the specific question

- Iterate based on findings - Explore the data, form hypotheses, test them, and refine

- Combine multiple analytical techniques - Statistics, visualization, correlation, pattern matching—whatever the question demands

- Explain the reasoning - Not just return results, but show how conclusions were reached

This is where AI-powered code execution agents come in. By giving Large Language Models (LLMs) the ability to write and run code against log data in real time, we transform them from question-answerers into data analysts.

The LLM doesn’t just respond with text — it:

- Writes Python code to explore the data

- Executes that code in a secure sandbox

- Observes the results

- Refines its approach

- Repeats until the question is answered

This iterative “ReAct” (Reasoning + Acting) loop enables sophisticated analysis that would be impractical with static queries alone.

The Problem: When Queries Aren’t Enough #

Building a system that lets AI agents execute arbitrary code against sensitive security data presents several critical challenges:

Safety and Isolation #

Executing untrusted code (especially code generated by an LLM) is inherently risky. We need strong isolation guarantees to prevent:

- Access to unauthorized data or systems

- Resource exhaustion (infinite loops, memory leaks)

- Interference between concurrent sessions

Performance at Scale #

Security analysts need answers quickly — within seconds, not minutes. But the nature of code execution agents makes this challenging:

Why it’s hard:

Each analysis involves multiple slow operations: LLM reasoning iterations (1-3s per call, typically 5-10 iterations), data loading from S3 (2-10s to read, decompress, and parse), and Python kernel initialization (1-3s). Without optimization, a simple analysis takes 20-40 seconds—far too slow for interactive use. The system must handle concurrent demand, minimize cold-start latency through pre-warming, and efficiently manage computational resources.

Stateful Analysis #

Many analysis tasks require multiple steps:

- Load and explore the data

- Perform initial analysis

- Refine the approach based on intermediate results

- Generate final insights

The challenge:

Unlike a single query that fetches and returns results, agentic analysis is inherently iterative. The agent needs to reference work from previous steps—filtered datasets, computed metrics, identified patterns—as it explores deeper. This requires maintaining execution state across multiple code runs within a session.

The architectural challenge: we need state to persist within a session (so the agent can build on its work) but be completely cleared between sessions (so tenant data never leaks). Standard stateless execution patterns don’t work here—we need something in between.
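The "persist within a session, clear between sessions" requirement can be illustrated with a small in-process stand-in. This is only a sketch: `AnalysisSession` is a hypothetical name, and plain `exec` provides no isolation at all, so it shows the state semantics rather than how a real sandboxed kernel would be managed.

```python
class AnalysisSession:
    """In-process stand-in for a kernel session: state persists until reset()."""

    def __init__(self) -> None:
        self._namespace: dict = {}

    def run(self, code: str) -> None:
        # Each call sees the variables defined by earlier calls in this session.
        exec(code, self._namespace)

    def get(self, name: str):
        return self._namespace.get(name)

    def reset(self) -> None:
        # Drop every variable so nothing carries over to the next session.
        self._namespace.clear()


session = AnalysisSession()
session.run("failed = [code for code in [200, 401, 401, 500] if code == 401]")
session.run("failed_count = len(failed)")  # builds on the previous step
count_during_session = session.get("failed_count")

session.reset()
count_after_reset = session.get("failed_count")
```

The second `run` call only works because the first one left `failed` behind, and after `reset()` nothing from the session is reachable.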

Integration with AI Agents #

The code execution environment must be seamlessly integrated with LLM-based agents. This is harder than it sounds.

The challenge:

The agent generates text (Python code), but we need to execute it, capture results in multiple formats (text, dataframes, plots, errors), and present those results back to the agent in a way it can reason about. Different sources and formats (for example, Parquet, CSV, or compressed JSON files in S3) require different loading patterns, but we want the agent to work uniformly across them.

Key integration requirements:

- Clear execution interface: Simple “execute code, get result” API that handles the complexity underneath

- Structured error handling: Errors must be returned in a format the agent can parse and learn from

- Multi-format results: Capture text output, return values, dataframe previews, and error tracebacks

- Data source abstraction: Agent shouldn’t need to know if logs are in S3 Parquet vs compressed JSON, just that `df` is ready to analyze
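As an illustration of the "execute code, get result" interface with structured errors, here is a minimal sketch. The `ExecutionResult` fields and the `execute` function are hypothetical names chosen for this example, and `exec` stands in for sending code to a sandboxed kernel.

```python
import contextlib
import io
import traceback
from dataclasses import dataclass
from typing import Optional


@dataclass
class ExecutionResult:
    ok: bool
    stdout: str
    error_type: Optional[str] = None
    error_message: Optional[str] = None
    traceback_text: Optional[str] = None


def execute(code: str, namespace: dict) -> ExecutionResult:
    """Run code and return its output, or a structured error instead of raising."""
    buffer = io.StringIO()
    try:
        with contextlib.redirect_stdout(buffer):
            exec(code, namespace)
    except Exception as exc:
        # Return the failure as data so the agent can read it and retry.
        return ExecutionResult(
            ok=False,
            stdout=buffer.getvalue(),
            error_type=type(exc).__name__,
            error_message=str(exc),
            traceback_text=traceback.format_exc(),
        )
    return ExecutionResult(ok=True, stdout=buffer.getvalue())


# Here "df" is just a list of dicts standing in for a loaded dataframe.
namespace = {"df": [{"user": "a"}, {"user": "b"}]}
good = execute("print(len(df))", namespace)
bad = execute("print(undefined_name)", namespace)
```

Returning errors as fields rather than raising keeps the calling loop simple: every step yields something the agent can inspect, whether or not the code worked.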

Building the Solution: Two Layers of Intelligence #

We built a robust, scalable code execution infrastructure combined with an intelligent agent framework for log analysis. The key insight: separate the concerns of safe code execution from intelligent analysis.

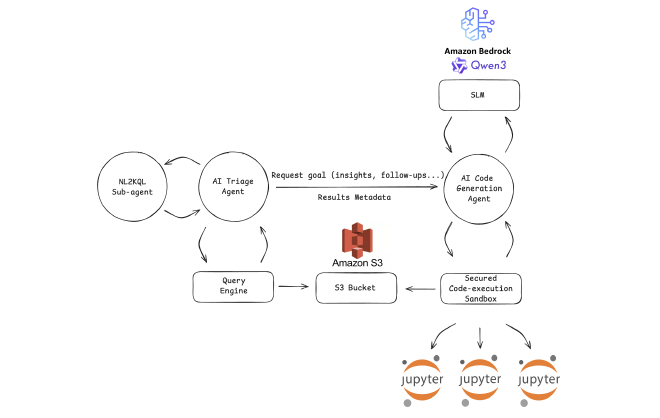

Architecture Overview #

Our solution consists of two main layers:

- Code Execution Sandbox: Provides isolated, secure environments for running Python code

- Log Analysis Framework: AI agents that generate code, interpret results, and iterate toward insights

Let’s explore how we solved the key challenges.

Layer 1: Making AI-Generated Code Safe to Run #

The Foundation: Jupyter Kernels #

We built our sandbox on Jupyter kernels—the same technology powering interactive notebooks. They provide:

- Statefulness: Variables and imports persist between executions

- Rich outputs: Support for text, dataframes, plots, and more

- Mature ecosystem: Battle-tested in data science and research

But Jupyter was designed for interactive, trusted use—not for running AI-generated code against production data. We needed additional layers of protection.

Stopping Data Exfiltration: Network-Level Isolation #

The most critical security requirement: the sandbox cannot access unauthorized resources.

Process-level isolation (separate kernel processes) isn’t enough. The risks of inadequate isolation are severe:

What could go wrong:

- Data exfiltration & compliance violations: Malicious or buggy code could call external APIs, sending sensitive customer data to unauthorized endpoints or violating data residency requirements

- Infrastructure compromise: Access to internal services could enable lateral movement, allowing the sandbox to probe or attack other systems, or download malicious packages from the internet

Even well-intentioned AI models can accidentally generate code that makes external calls. We can’t rely on the LLM to “be careful.”

Our solution: Network-level isolation using security groups.

The sandbox environment is placed in a restricted network segment that allows only:

- DNS resolution (for basic functionality)

- AWS STS authentication (for assuming the minimal required IAM role)

- S3 read access (for loading log data from approved buckets)

That’s it. No internet access, no internal service calls, no other AWS APIs. Even if malicious code runs, it can’t reach anything beyond the specific S3 data it’s authorized to analyze. The network itself enforces the boundary—no trust required.

Speed Without Compromise: Kernel Pooling #

Creating fresh execution environments for every request is too slow. We need sub-second response times. But reusing environments introduces new risks.

What could go wrong:

- Data leakage: Without proper state cleanup, one tenant’s data could leak into another’s session—a catastrophic security failure

- Resource exhaustion: Runaway analyses (infinite loops, memory leaks) could consume all resources, bringing down the service for everyone

- Performance degradation: Without pooling, cold starts (1-3s per kernel) make every request feel sluggish; without cleanup, zombie processes accumulate until manual intervention is needed

The trade-off: reuse for performance vs. isolation for security. We need both.

Our solution: Kernel pooling and session management.

We maintain a pool of pre-warmed execution environments:

- Pre-warming: Create kernels during idle time, not when users are waiting

- Dynamic scaling: Scale from minimum baseline to maximum capacity as demand increases

- State cleanup: Reset kernel state between uses to prevent data leakage—this is critical for security

- Failure recovery: Automatically replace unhealthy kernels before they cause user-visible failures

Sessions are tied to specific analysis tasks, with automatic expiration:

- Time-to-Live (TTL): Sessions expire after inactivity to reclaim resources—prevents slow resource leaks

- Background purging: Expired sessions are cleaned up automatically without user intervention

- Resource limits: Cap maximum concurrent sessions per tenant—prevents noisy neighbor problems

This approach gives us the performance of long-lived processes with the isolation guarantees of ephemeral execution.
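The pooling and session ideas above can be sketched in a few dozen lines. Everything here is an assumption made for illustration: `FakeKernel` stands in for a real kernel process, the class and method names are hypothetical, and the sketch is single-threaded, whereas a production pool would need locking, health checks, and per-tenant limits.

```python
import time
from collections import deque
from typing import Callable, Deque, Dict, Tuple


class FakeKernel:
    """Hypothetical stand-in for a real kernel process."""

    def __init__(self) -> None:
        self.namespace: dict = {}

    def reset(self) -> None:
        self.namespace.clear()


class KernelPool:
    def __init__(self, min_size: int, max_size: int, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._max_size = max_size
        self._ttl = ttl_seconds
        self._clock = clock
        # Pre-warm the baseline so the first requests skip the cold start.
        self._idle: Deque[FakeKernel] = deque(FakeKernel() for _ in range(min_size))
        self._sessions: Dict[str, Tuple[FakeKernel, float]] = {}

    def acquire(self, session_id: str) -> FakeKernel:
        if session_id in self._sessions:
            kernel, _ = self._sessions[session_id]
        elif self._idle:
            kernel = self._idle.popleft()
        elif len(self._sessions) < self._max_size:
            kernel = FakeKernel()  # scale up on demand
        else:
            raise RuntimeError("kernel pool exhausted")
        # Record the last-used time; this is what the TTL is measured against.
        self._sessions[session_id] = (kernel, self._clock())
        return kernel

    def release(self, session_id: str) -> None:
        kernel, _ = self._sessions.pop(session_id)
        kernel.reset()  # wipe state before another session can get this kernel
        self._idle.append(kernel)

    def purge_expired(self) -> int:
        """Release every session idle for longer than the TTL; return the count."""
        now = self._clock()
        expired = [sid for sid, (_, last_used) in self._sessions.items()
                   if now - last_used > self._ttl]
        for sid in expired:
            self.release(sid)
        return len(expired)
```

The important ordering is in `release`: the kernel is reset before it goes back on the idle queue, so a reused kernel never reaches a new session with old variables still in it.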

Layer 2: Teaching AI to Think Like an Analyst #

With the code execution sandbox in place, we built an AI-powered log analysis framework on top of it.

How the Agent Thinks: The Iterative Analysis Loop #

Traditional LLM applications follow a simple pattern: question → answer. But complex analysis requires iteration.

We use the ReAct (Reasoning + Acting) pattern, where the agent:

- Reasons about what to do next (“I need to check how many unique users appear in the logs”)

- Acts by writing Python code (`df['user'].nunique()`)

- Observes the execution result (“147 unique users”)

- Refines its approach (“Now let me find which users have the most failed attempts…”)

This loop continues until the question is answered.

The key advantage: the agent doesn’t need perfect code on the first try. It can make mistakes, see the errors, and self-correct. Just like a human analyst exploring data interactively.
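Here is a minimal sketch of that loop, including the self-correction step. The model is replaced by a scripted function (`scripted_model`, a hypothetical stand-in for an LLM call), and `exec` stands in for the sandbox, so this shows only the control flow, not a real agent.

```python
import contextlib
import io
from typing import Callable, List, Tuple

Step = Tuple[str, str]  # (code that was run, observation that came back)


def run_code(code: str, namespace: dict) -> str:
    """Execute one step and return what the agent observes: output or an error."""
    buffer = io.StringIO()
    try:
        with contextlib.redirect_stdout(buffer):
            exec(code, namespace)
    except Exception as exc:
        return f"ERROR {type(exc).__name__}: {exc}"
    return buffer.getvalue().strip()


def react_loop(question: str,
               propose: Callable[[str, List[Step]], Tuple[str, str]],
               namespace: dict,
               max_steps: int = 10) -> Tuple[str, List[Step]]:
    history: List[Step] = []
    for _ in range(max_steps):
        kind, content = propose(question, history)   # reason
        if kind == "answer":
            return content, history
        observation = run_code(content, namespace)   # act
        history.append((content, observation))       # observe
    return "no answer within step budget", history


def scripted_model(question: str, history: List[Step]) -> Tuple[str, str]:
    """Hypothetical model: first attempt has a typo, second attempt fixes it."""
    if not history:
        return "code", "print(len(set(e['usr'] for e in events)))"
    if history[-1][1].startswith("ERROR"):
        return "code", "print(len(set(e['user'] for e in events)))"
    return "answer", f"{history[-1][1]} unique users"


events = [{"user": "alice"}, {"user": "bob"}, {"user": "alice"}]
answer, history = react_loop("How many unique users?", scripted_model, {"events": events})
```

The first step fails with a `KeyError`, the error text becomes the observation, and the next proposal corrects the field name. The `max_steps` budget is there so a confused model cannot loop forever.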

Choosing the Right Model: Performance vs. Quality #

When building code execution agents, model selection is critical. Large frontier models like Claude Sonnet or GPT-4 produce excellent code, but their latency can be prohibitive for interactive use.

Remember our earlier calculation: if each LLM call takes 2-3 seconds and a typical analysis requires 5-10 reasoning iterations, that’s 10-30 seconds just waiting for the LLM—before any code even executes.

Our approach: Comprehensive model evaluation

A critical constraint: models must be available on AWS Bedrock. For security and compliance, we cannot send customer data to third-party APIs. Bedrock allows us to use models within our AWS infrastructure while maintaining complete data isolation.

We evaluated models available on Bedrock across two dimensions:

- Output quality: Correctness and sophistication of generated Python code for various analysis tasks (statistical analysis, data exploration, anomaly detection, etc.)

- Latency: End-to-end response time for reasoning and code generation

The evaluation covered a wide range of models, including:

- Large frontier models (e.g., Claude Sonnet, DeepSeek R1)

- Mid-size general models (e.g., Claude Haiku, Llama, OpenAI GPT-OSS)

- Specialized coding models (e.g., Qwen3-Coder, IBM Granite Code)

The winner: qwen3-coder:30b

We ultimately chose Qwen3-Coder 30B, a specialized code generation model available on Bedrock that strikes the optimal balance:

- Fast inference: 300-800ms per call (3-4x faster than frontier models)

- Decent code quality: Not quite Claude-level sophistication, but more than adequate for log analysis tasks

- Bedrock-hosted: Runs within our AWS infrastructure, maintaining compliance while reducing latency

For a typical 7-iteration analysis:

- Claude Sonnet: 2.5s × 7 = 17.5 seconds in LLM time

- Qwen3-Coder: 0.5s × 7 = 3.5 seconds in LLM time

That 14-second improvement is the difference between an interaction feeling sluggish versus responsive. Combined with our kernel pooling and concurrent data export optimizations, end-to-end analysis (from initial question through all LLM iterations, code execution, and data processing to final insight) completes in 3-5 seconds—fast enough to feel interactive.
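The LLM-time comparison is simple enough to reproduce directly. This sketch uses only the per-call figures quoted above and deliberately ignores code execution and data loading.

```python
def llm_time(seconds_per_call: float, iterations: int) -> float:
    """Total time spent waiting on the model across one analysis."""
    return seconds_per_call * iterations


ITERATIONS = 7

sonnet_seconds = llm_time(2.5, ITERATIONS)     # 17.5
qwen_seconds = llm_time(0.5, ITERATIONS)       # 3.5
saved_seconds = sonnet_seconds - qwen_seconds  # 14.0
```

Because the cost scales linearly with the number of iterations, per-call latency matters more for agents than for single-shot question answering.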

The trade-off is worth it: slightly less elegant code, but a dramatically better user experience.

Putting It to Work: From Data Onboarding to Autonomous Triage #

This infrastructure powers several key features in Vega:

1. Data Source Mapping #

When customers connect a new data source, we need to identify what it actually is—is this AWS CloudTrail? Microsoft Entra ID? Okta? The same underlying data can come from different collection methods, making identification non-trivial.

The agent:

- Loads a sample of logs from the newly connected index

- Analyzes field names, value patterns, data structures, and event types

- Compares against known data source signatures

- Identifies the data source with confidence scores and reasoning

This previously required manual analysis by security engineers examining sample logs. Now it happens automatically in seconds, enabling faster data source onboarding.
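One simple way to picture the "compare against known signatures" step is a field-overlap score. The sketch below is an assumption about how such matching could work, not a description of our production logic: the signature sets are small illustrative samples of field names each source commonly emits, and the score is just the fraction of signature fields found in the sample.

```python
from typing import Dict, List, Set, Tuple

# Illustrative signatures only; a real catalog would be far larger and
# would also look at value patterns and event types.
SIGNATURES: Dict[str, Set[str]] = {
    "aws_cloudtrail": {"eventName", "eventSource", "awsRegion",
                       "userIdentity", "sourceIPAddress"},
    "okta_system_log": {"eventType", "actor", "outcome",
                        "published", "displayMessage"},
    "entra_id_signin": {"userPrincipalName", "appDisplayName", "ipAddress",
                        "createdDateTime", "conditionalAccessStatus"},
}


def identify_source(sample: List[dict]) -> List[Tuple[str, float]]:
    """Rank known sources by the fraction of signature fields seen in the sample."""
    seen: Set[str] = set()
    for record in sample:
        seen.update(record.keys())
    scores = [(name, len(fields & seen) / len(fields))
              for name, fields in SIGNATURES.items()]
    return sorted(scores, key=lambda item: item[1], reverse=True)


sample_logs = [{
    "eventName": "ConsoleLogin",
    "eventSource": "signin.amazonaws.com",
    "awsRegion": "us-east-1",
    "sourceIPAddress": "203.0.113.7",
}]
ranked = identify_source(sample_logs)
```

In the agent setting, code like this is generated and run on demand, so the model can follow up on a low-confidence match by inspecting values or nested structures rather than stopping at field names.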

2. Intelligent Query Summaries #

When users run queries that return thousands of results, we automatically generate summaries:

“Most failed logins are from a single user ([email protected]) with 847 failures from 3 different IP addresses, suggesting a possible compromised account or brute force attempt.”

The agent can:

- Identify statistical outliers

- Detect temporal patterns

- Correlate across multiple dimensions

- Highlight security-relevant anomalies
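A summary like the one quoted above is only trustworthy if its numbers are computed rather than guessed. The following sketch shows the kind of code an agent might generate to gather those facts, assuming a non-empty pandas DataFrame with hypothetical `user` and `source_ip` columns.

```python
import pandas as pd


def summarize_failures(df: pd.DataFrame) -> dict:
    """Compute the facts a summary sentence can cite. Assumes df is non-empty."""
    per_user = df["user"].value_counts()  # sorted, most frequent first
    top_user = per_user.index[0]
    top_rows = df[df["user"] == top_user]
    return {
        "total_failures": int(len(df)),
        "top_user": top_user,
        "top_user_failures": int(per_user.iloc[0]),
        "top_user_share": round(float(per_user.iloc[0]) / len(df), 3),
        "top_user_distinct_ips": int(top_rows["source_ip"].nunique()),
    }


# Hypothetical failed-login events.
failures = pd.DataFrame({
    "user": ["alice"] * 6 + ["bob"] * 2 + ["carol"] * 2,
    "source_ip": ["10.0.0.1", "10.0.0.2", "10.0.0.3"] * 2
                 + ["10.0.1.1"] * 2 + ["10.0.2.1"] * 2,
})
facts = summarize_failures(failures)
```

The resulting dictionary gives the model concrete values to put in the sentence, and reporting the share alongside the raw count makes it clear whether "most" is actually justified.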

3. AI-Powered Triage #

We’re building an automated triage system that combines our NL2KQL capabilities with log analysis to create a powerful investigation loop.

How it works:

The agent operates in an iterative cycle:

- Fetch: Uses NL2KQL to convert investigative questions into KQL queries that fetch relevant data (“Get all login events for this user in the last 24 hours”)

- Analyze: Applies code execution to process the fetched data—calculating statistics, detecting anomalies, correlating events

- Reason: Extracts insights from the analysis results (“User has 50x more failed logins than their baseline”)

- Investigate: Determines next steps and formulates new questions (“Were these attempts from unusual IP addresses?”)

- Repeat: Generates new KQL queries based on findings, closing the loop

This creates an autonomous investigation capability where the agent:

- Assesses severity based on historical context

- Correlates with related events across multiple data sources

- Identifies anomalies that require human attention

- Suggests specific investigation paths with supporting evidence

- Prioritizes alerts for analyst review with confidence scores

By combining NL2KQL for data fetching with code execution for analysis, the agent can iteratively explore security incidents—fetching precisely the data it needs and analyzing it deeply—all without human intervention until actionable findings emerge.

Conclusion #

By combining isolated code execution environments with AI agents, we’ve built a powerful capability that bridges the gap between data fetching and data analysis. Our code execution sandbox enables:

- Flexible analysis: No predefined query limitations—agents write custom code for each unique question

- Iterative exploration: Agents can refine their approach based on intermediate results

- Secure execution: Network-level isolation ensures AI-generated code can only access authorized data

- Real-time performance: Fast enough for interactive use, with end-to-end analyses completing in 3-5 seconds

This infrastructure has become a cornerstone of Vega’s AI-powered features, powering everything from automated data source mapping to intelligent query summarization and AI-assisted triage.

As security operations continue to generate ever-growing volumes of data, tools that combine the reasoning capabilities of LLMs with the analytical power of code execution will be essential for staying ahead of threats.

Want to see it in action? Stay tuned for demos, or reach out to learn more about how Vega is transforming cybersecurity analytics.

Further Reading: